In December of last year, Microsoft introduced Phi-4, a small language model with state-of-the-art features and high performance. Now the Redmond-based tech giant has introduced two more small language models: Phi-4-multimodal and Phi-4-mini. Phi-4-multimodal processes speech, vision, and text simultaneously, whereas Phi-4-mini is dedicated to text-based tasks.

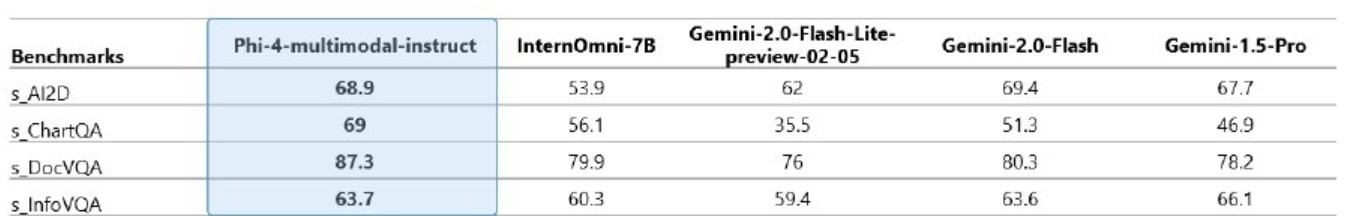

Phi-4-multimodal is a 5.6B-parameter model that integrates speech, vision, and text processing into a single, unified architecture. Compared with other state-of-the-art omni models, Phi-4-multimodal achieves better performance across multiple benchmarks. Check out the table below:

Phi-4-multimodal outperformed specialized speech models such as WhisperV3 and SeamlessM4T-v2-Large in both automatic speech recognition (ASR) and speech translation (ST). According to Microsoft, the model took the top position on the Hugging Face OpenASR leaderboard with a word error rate (WER) of 6.14%. Phi-4-multimodal also performs strongly on vision-related tasks in mathematics and science, and it matched popular models such as Gemini-2-Flash and Claude-3.5-Sonnet on multimodal tasks like document and chart interpretation.
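For readers unfamiliar with the metric, WER is the minimum number of word substitutions (S), deletions (D), and insertions (I) needed to turn a model's transcript into the reference transcript, divided by the number of words in the reference (N): WER = (S + D + I) / N. A 6.14% WER therefore corresponds to roughly six word errors per 100 reference words. The sketch below computes WER with a word-level edit distance; the function name `word_error_rate` is hypothetical, and it only splits on whitespace, whereas leaderboards typically normalize text (casing, punctuation) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Hypothetical helper: WER = (S + D + I) / N via word-level edit distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the minimum edits to turn the first i-1 reference words
    # into the first j hypothesis words (one row of the DP table at a time).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match (cost 0) or substitution (cost 1)
            )
        prev = curr

    # Total edits divided by the number of reference words.
    return prev[len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions are counted, WER can exceed 100% when a transcript contains many spurious words.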

Both Phi-4-multimodal and Phi-4-mini are now available to developers in Azure AI Foundry, Hugging Face, and the NVIDIA API Catalog. The technical paper outlines the models' recommended uses and their limitations.